How New Artificial Intelligence Program ChatGPT Will Transform Legal Practice

A new program called ChatGPT has “conversations” that look remarkably, well, “human.” And it’s going to change everything, including the way lawyers and the legal system work.

ChatGPT uses powerful AI to generate documents that appear remarkably “human-like,” and lawyers are already starting to use it to draft legal documents;

Because ChatGPT is a “language model,” it generates documents by predicting which words are likely to come next, rather than by drawing on knowledge of the subject matter, so it sometimes appears to “hallucinate,” i.e., to make up facts;

Although this early version has its flaws, ChatGPT is improving incredibly quickly, and as it becomes more reliable, it will transform the legal world, and society along with it.

ChatGPT is a remarkable AI-powered chatbot that can answer questions and create an astonishing range of documents, from recipes to computer code to short stories to, yes, legal briefs. The text it generates looks, for all the world, like it was written by a genius human being, albeit a genius who sometimes makes things up (more on that below).

ChatGPT in Action

You can try ChatGPT for yourself by visiting the free version at chat.openai.com. (Note that, due to demand, the site may be busy, and you may have to wait a bit.)

ChatGPT Drafts a Cease and Desist Letter

I asked ChatGPT to draft a “cease and desist” letter on behalf of a hypothetical client, Frank Jones, who is, hypothetically, being harassed by a debt collection agency about a debt that is owed by a different person with the same name.

The way you instruct ChatGPT is by typing in a “prompt.” Here’s a screenshot of the prompt that I used:

A few seconds later, ChatGPT wrote this response:

Performance Evaluation for ChatGPT

As lawyers, we love to criticize (well, maybe you don’t, but many other lawyers do…), and no doubt many lawyers reading the response above could find things to improve, maybe even things that are wrong. As discussed further below, ChatGPT is by no means perfect. But it is nonetheless remarkable. And the most important thing about it is that the software behind it is improving every day, and six months from now, it will be far beyond where it is today.

Where Did ChatGPT Come From?

ChatGPT, released in November 2022, is a chatbot running on software called GPT. GPT was created by OpenAI (@OpenAI), an AI research lab founded in 2015.

GPT stands for “Generative Pre-trained Transformer.” “Generative,” because it generates text when prompted. “Pre-trained,” because the algorithm was trained on a vast collection of texts, from thousands of published books to millions of websites. “Transformer,” because that is the particular type of algorithm that it uses. The “3” in GPT-3 indicates that it is the third major version of the software: OpenAI released GPT-1 in 2018, GPT-2 in 2019, and GPT-3 in 2020. ChatGPT runs on an improved version of that model, from what OpenAI calls the GPT-3.5 series. We’re told that GPT-4 is coming in the next few months, but there is no official release date yet.

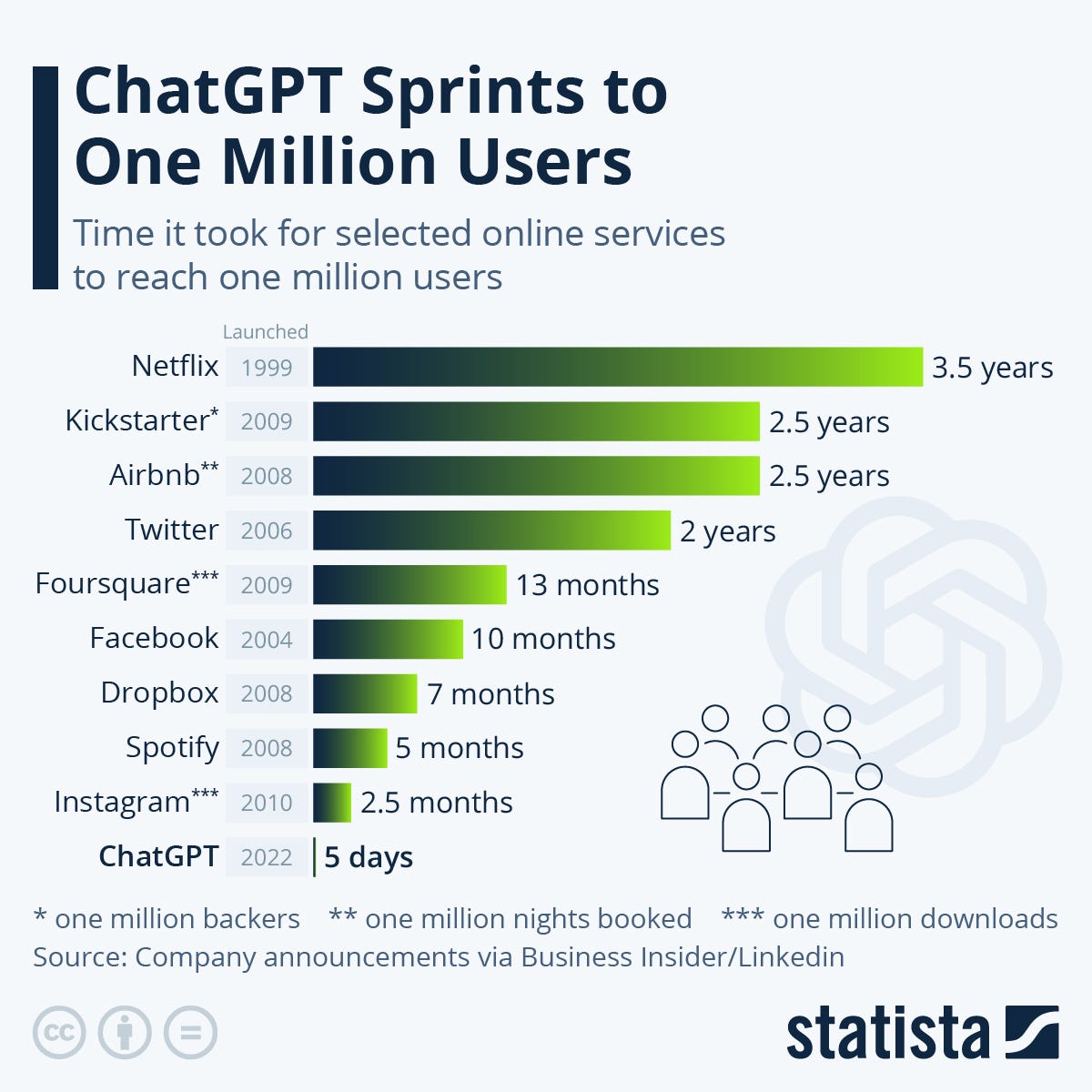

Since its release just a few months ago, ChatGPT has seen incredible growth in popularity and has reportedly passed 100 million monthly active users, which would make it the fastest-growing consumer application of all time.

ChatGPT Looks Like Magic, and That Has Led to Lots of Confusion

The program was designed to simulate a text conversation that feels like you are talking to a super smart human being on the other end of the wire. This format makes it easy to use because it is familiar to us, the human users. But it has also led to a lot of confusion about exactly what ChatGPT is doing, and in particular, the types of mistakes that it is prone to make.

Think of ChatGPT Under the Hood as a Super Powerful “Autocomplete”

To vastly oversimplify an incredibly complex piece of software, you can think of ChatGPT as a super-advanced version of the autocomplete on your phone that suggests words as you type a text, or of the tool that lets Google offer suggestions when you start to type a search.

In each case, you provide a string of words or letters, and then the software predicts additional words based on what you typed. This is based on what is known as a “language model.” A language model is a mathematical model that includes, among other things, data on the probability of certain words occurring together in a string of text. A language model can be used to predict, from a phrase or a string of words, what word is likely to come next in the string.

Predicting the Next Word In a Sentence

Let's say we take the string of words “Tom likes to eat ___________” and we want to predict what the next word in that string is going to be. (For a deeper dive on this example, see “How ChatGPT really works, explained for non-technical people,” by Guodong (Troy) Zhao.)

We can't say with 100% certainty, but based on our knowledge of the English language, we can say that it's much more likely that the next word in that sentence is going to be “pizza” rather than “sawdust.”
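To make “the probability of certain words occurring together” concrete, here is a minimal sketch of the simplest possible language model, assuming a tiny made-up corpus: a “bigram” model that counts which words follow each word and turns those counts into probabilities. ChatGPT does not work from a count table like this (it uses a neural network, works on word fragments called tokens, and looks at far more than the single previous word), but what comes out is the same kind of thing: a probability for each candidate next word. The corpus and function names are hypothetical, for illustration only.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus standing in for the billions of words a real model is trained on.
CORPUS = """
tom likes to eat pizza . sara likes to eat pizza . tom likes to eat pasta .
the kids eat pizza on fridays . termites eat sawdust .
"""

def train_bigrams(text):
    """Count how often each word is immediately followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Convert the follow-counts for `prev` into probabilities (empty if `prev` was never seen)."""
    followers = counts.get(prev)
    if not followers:
        return {}
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}

model = train_bigrams(CORPUS)
probs = next_word_probs(model, "eat")
print(probs)  # {'pizza': 0.6, 'pasta': 0.2, 'sawdust': 0.2}
```

In this toy corpus, “eat” is followed by “pizza” three times out of five, so the model rates “pizza” three times as likely as “sawdust.” A real model reaches similar judgments, just from vastly more text.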

ChatGPT has developed a huge amount of statistical knowledge to guess “which word comes next” because it’s been trained on a massive collection of texts. GPT-1 was trained on about 4.5 GB of text from roughly 7,000 unpublished books. GPT-2 was trained on a much larger set of around 40 GB of web documents. GPT-3, the foundation for ChatGPT, was trained on about 570 GB of text from websites, Wikipedia, and a huge collection of published books.

As Stephen Wolfram (@stephen_wolfram) explains:

what ChatGPT is always fundamentally trying to do is to produce a “reasonable continuation” of whatever text it’s got so far, where by “reasonable” we mean “what one might expect someone to write after seeing what people have written on billions of webpages, etc.”

Stephen Wolfram, What Is ChatGPT Doing … and Why Does It Work?

A Document of a Thousand Words Begins With a Single Word: the Power of Adding One Word at a Time

Using all this data, GPT is amazingly good at predicting which word will come next in response to the prompt that you type in. But how does it go on to write multiple paragraphs of text?

The answer is: it just keeps repeating the process. At step 1, it takes the prompt that you type in and figures out the next word. At step 2, it adds that word to the prompt, feeds the result back into the algorithm, and figures out the word after that. Same for step 3, step 4, and so on.

In Wolfram’s hypothetical example, we start with the prompt, “The best thing about AI is its ability to ____________”

What word is likely to come next? According to Wolfram’s table of probabilities, the best guess for the next word is “learn” (4.5% probability), and the second-best guess is “predict” (3.5%).

In Wolfram’s example, the model picks “learn” as the next word. (Note, as discussed below, that while “learn” is the most likely choice, at 4.5%, GPT won’t always pick it, because there is a random component to each selection.)

Then, and this is the crucial step, GPT takes the resulting phrase, “The best thing about AI is its ability to learn,” and treats that whole phrase as a new prompt for the next step. In other words, it just keeps going, adding one word at a time.
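The one-word-at-a-time loop can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: the “model” is a hypothetical lookup table that only looks at the last word and always returns a single next word (no randomness), and the continuation “learn from data” is made up. The part that mirrors what GPT does is the loop in `generate()`: predict one word, append it, and feed the longer text back in as the new prompt.

```python
# Hypothetical toy "model": maps the most recent word to a single next word.
# A real model considers the whole text so far and assigns probabilities to many candidates.
NEXT_WORD = {
    "to": "learn",
    "learn": "from",
    "from": "data",
}

def predict_next_word(text):
    """Stand-in for the model: guess the next word from the last word of `text`."""
    words = text.split()
    if not words:
        return None
    return NEXT_WORD.get(words[-1])  # None means the toy model has no guess, so we stop

def generate(prompt, max_words=10):
    """Repeatedly predict one word and append it, treating the result as the new prompt."""
    text = prompt
    for _ in range(max_words):
        word = predict_next_word(text)  # step N: predict the next word
        if word is None:
            break
        text = text + " " + word        # feed the longer text back in for step N + 1
    return text

print(generate("The best thing about AI is its ability to"))
# The best thing about AI is its ability to learn from data
```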

Randomness in the Model and Why ChatGPT Sometimes Says Crazy Wrong Things

If we always pick the top word on the list (here, “learn”), the result will be too rigid and not very helpful. It will be very consistent, but also kind of boring and useless (does that remind you of anyone you know?)

So the folks at OpenAI have set up the system to incorporate a little bit of randomness, and they can tune how much using a parameter called “temperature.” At very low temperatures, the model almost always picks the most likely word; higher temperatures give less likely words a better chance. There is no theory behind the right setting, but based purely on experience, a temperature of around 0.8 seems to be the Goldilocks temperature: enough randomness to keep it interesting, but not so much that it goes off the rails.
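Here is a minimal sketch of how a temperature setting can reshape next-word probabilities, assuming the common textbook definition: raise each probability to the power 1/T and renormalize (equivalently, divide log-probabilities by T before a softmax). This is not OpenAI’s code, and the function names are hypothetical. Only the “learn” and “predict” figures come from Wolfram’s table; the sketch renormalizes over just those two candidates.

```python
import random

def apply_temperature(probs, temperature):
    """Reshape a next-word distribution: p ** (1 / T), then renormalize.

    T < 1 sharpens the distribution toward the top word;
    T > 1 flattens it so less likely words are chosen more often.
    """
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (as T approaches 0, this approaches always picking the top word)")
    weights = {word: p ** (1.0 / temperature) for word, p in probs.items()}
    total = sum(weights.values())
    return {word: w / total for word, w in weights.items()}

def sample_next_word(probs, temperature, rng=random):
    """Randomly pick one word, weighted by the temperature-adjusted probabilities."""
    adjusted = apply_temperature(probs, temperature)
    words = list(adjusted)
    return rng.choices(words, weights=[adjusted[w] for w in words], k=1)[0]

# Top two candidates from Wolfram's table, renormalized over just these two.
wolfram_top_two = {"learn": 0.045, "predict": 0.035}

rng = random.Random(42)  # fixed seed so the run is repeatable
for t in (0.2, 0.8, 2.0):
    picks = [sample_next_word(wolfram_top_two, t, rng) for _ in range(1000)]
    share = picks.count("learn") / len(picks)
    print(f"temperature {t}: 'learn' chosen {share:.0%} of the time")
```

At a low temperature, “learn” wins most of the draws; at a high temperature, the two words come out closer to a coin flip. That trade-off between predictability and variety is what the 0.8 setting is balancing.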

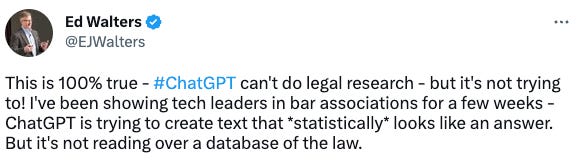

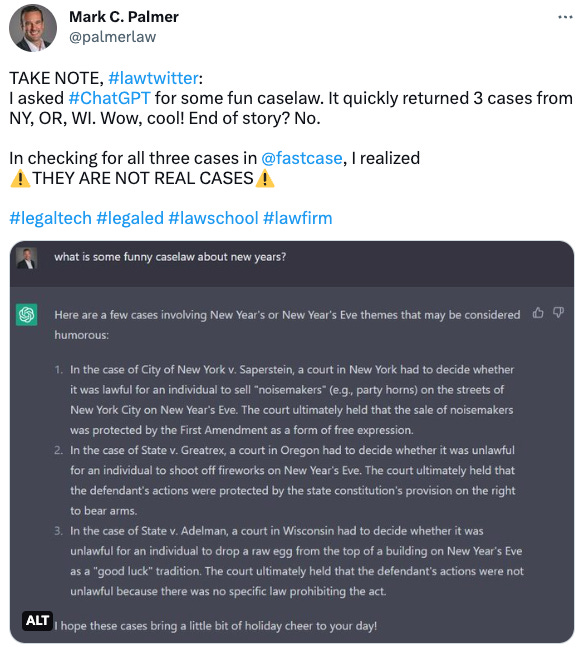

But sometimes, it still goes off the rails. For example, Mark Palmer (@palmerlaw), in a Twitter thread, shared his experience with ChatGPT making up fake cases:

The Best Way to Understand What ChatGPT is Doing Is to Understand What It’s Not Doing

In response to the example above, Ed Walters (@EJWalters), CEO of the legal research platform Fastcase (@FastCase), explained that ChatGPT is repeatedly picking the next most statistically likely word, not answering questions based on legal knowledge.

Citing the Stephen Wolfram article discussed above, he went on to explain that ChatGPT was making guesstimates based on language statistics, rather than doing legal research:

Here’s Why You Shouldn’t Count Out ChatGPT Just Yet

So, ok, but then what’s the point? If you had a junior staff member or legal assistant working with you and they were creative but tended to “make up” caselaw, what would you do? Well, likely you’d fire them.

But here’s why you should put ChatGPT on a performance improvement plan rather than giving it a pink slip just yet.

The point is it’s still early days, and things are moving fast. The point is that ChatGPT works best when you use it to draft text or brainstorm ideas, rather than turning it loose.

The point is, it’s still learning. So are we.

But it’s only going to get better. So are we.

Watch this space.

Questions or comments? Please leave them below.

If you found this helpful, I’d be grateful if you could share it with anyone else who might find it interesting.